In October, IBM released its first Artificial Intelligence Unit (AIU), a system-on-chip.

It is an application-specific integrated circuit (ASIC) designed to train and run deep learning models, which require large-scale parallel computing, faster and more efficiently.

AIU: designed for modern AI computing

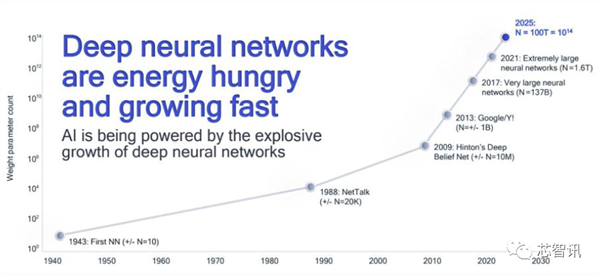

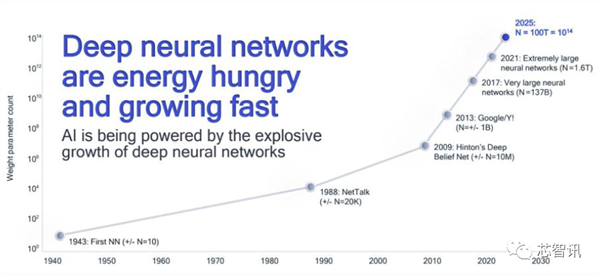

Over the years, the industry has mostly used CPUs and GPUs to run deep learning models, but the number of AI models is growing exponentially.

At the same time, deep learning models are growing ever larger, with billions or even trillions of parameters, and they demand ever more computing power. The AI performance gains of chips built on traditional architectures, such as CPUs and GPUs, have hit a bottleneck.

Deep neural networks' demand for computing power is rising rapidly

According to IBM, deep learning models have traditionally been trained and run on a combination of CPUs and GPU coprocessors.

The flexibility and precision of the CPU make it ideal for general-purpose software applications, but it is at a disadvantage when it comes to training and running deep learning models that require large-scale, parallel AI operations.

The GPU was originally developed for rendering graphics, but the industry has since discovered its advantages for AI computing.

However, both CPUs and GPUs were designed before the deep learning revolution, and their efficiency gains now lag behind deep learning's exponentially growing demand for computing power. What the industry really needs is a general-purpose chip optimized for the matrix and vector multiplication operations used in deep learning.

For the past five years, the IBM Research AI Hardware Center has focused on developing next-generation chips and AI systems. Its goal is to improve the efficiency of AI hardware by 2.5 times each year, so that by 2029 AI models can be trained and run 1,000 times faster than in 2019.
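The two figures in this goal can be related with simple compound-growth arithmetic. The sketch below is only an illustration: it assumes the 2.5x improvement compounds once per year, and the function names are hypothetical, not anything IBM publishes.

```python
import math


def compound_gain(rate_per_year: float, years: int) -> float:
    """Total multiplicative gain after compounding rate_per_year for `years` years."""
    return rate_per_year ** years


def years_to_reach(target_gain: float, rate_per_year: float) -> float:
    """Years of compounding needed to reach target_gain at rate_per_year."""
    return math.log(target_gain) / math.log(rate_per_year)


def required_rate(target_gain: float, years: int) -> float:
    """Constant yearly rate that yields target_gain after `years` years."""
    return target_gain ** (1.0 / years)


if __name__ == "__main__":
    # Assumption: 2019 -> 2029 is treated as ten yearly compounding steps.
    print(f"2.5x per year for 10 years: {compound_gain(2.5, 10):,.0f}x")
    print(f"Years to reach 1,000x at 2.5x per year: {years_to_reach(1000, 2.5):.2f}")
    print(f"Yearly rate needed for 1,000x in 10 years: {required_rate(1000, 10):.2f}x")
```

Under these assumptions, a sustained 2.5x yearly gain would pass 1,000x after roughly seven and a half years, so the 2029 target leaves some margin for years in which progress is slower.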

The latest AIU chip is the first from IBM to be tailored to modern AI statistics.

According to IBM, the AIU was designed and optimized to accelerate the matrix and vector computations used in deep learning models. The AIU can solve computationally complex problems and perform data analysis much faster than a CPU can.
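To see why matrix and vector operations dominate, consider a single fully connected layer, which is essentially one matrix-vector product followed by a simple nonlinearity. The following is a minimal pure-Python sketch; the names `matvec`, `relu`, and `dense_layer` are illustrative and do not describe how the AIU is programmed.

```python
def matvec(weights, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def dense_layer(weights, bias, x):
    """One fully connected layer: relu(W @ x + b)."""
    return relu([s + b for s, b in zip(matvec(weights, x), bias)])


def multiply_accumulate_count(weights):
    """Number of multiply-accumulate operations in one matvec."""
    return sum(len(row) for row in weights)


if __name__ == "__main__":
    W = [[1.0, 2.0], [-1.0, 0.5]]
    b = [0.0, 0.0]
    x = [3.0, 1.0]
    print(dense_layer(W, b, x))          # [5.0, 0.0]
    print(multiply_accumulate_count(W))  # 4
```

The multiply-accumulate count grows with the product of the layer's input and output sizes, which is why hardware that performs many of these operations in parallel pays off for large models.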

So how does the IBM AIU optimize for deep learning? The answer is “approximate computing” plus a “simplified AI workflow”.

Embrace lower precision with approximate computing

Historically, many AI computations have relied on high-precision 64-bit and 32-bit floating-point operations. IBM believes that AI computing does not always require such precision.

IBM has a term for lowering the precision of traditional calculations: “approximate computing”. In its blog, IBM explains the rationale:

“Do we need this level of accuracy for common deep learning tasks? Does our brain need a high-resolution image to recognize a family member or a cat? When we enter a text thread for search, do we need precision in the relative ranking of the 50,002nd most useful reply versus the 50,003rd? The answer is that many tasks, including these examples, can be accomplished with approximate computing.”

Building on this idea, IBM pioneered approximate computing techniques that scale down from 32-bit floating-point arithmetic to a hybrid 8-bit floating-point (HFP8) format that uses a quarter as many bits. According to IBM, this leaner format greatly reduces the amount of number crunching needed to train and run AI models without sacrificing accuracy.
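The effect of dropping precision can be illustrated by rounding values to a few mantissa bits and comparing a dot product against the full-precision result. This is only a rough sketch: it models mantissa rounding alone, ignores exponent range and every detail of IBM's actual HFP8 format, and the choice of 3 mantissa bits (what an 8-bit float with 1 sign bit and 4 exponent bits would leave) is an assumption made for illustration.

```python
import math
import random


def round_to_mantissa_bits(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest value with `mantissa_bits` explicit mantissa bits.

    Only precision is modeled; exponent overflow and underflow are ignored.
    """
    if x == 0.0 or not math.isfinite(x):
        return x
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)  # +1 covers the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)


def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def dot_relative_error(n: int = 256, mantissa_bits: int = 3, seed: int = 0) -> float:
    """Relative error of a dot product after rounding both inputs."""
    rng = random.Random(seed)
    # Positive inputs keep the exact result well away from zero.
    a = [rng.uniform(0.5, 1.5) for _ in range(n)]
    b = [rng.uniform(0.5, 1.5) for _ in range(n)]
    exact = dot(a, b)
    approx = dot(
        [round_to_mantissa_bits(v, mantissa_bits) for v in a],
        [round_to_mantissa_bits(v, mantissa_bits) for v in b],
    )
    return abs(approx - exact) / abs(exact)


if __name__ == "__main__":
    print(round_to_mantissa_bits(1.1, 3))  # 1.125
    print(f"relative error of dot product: {dot_relative_error():.4%}")
```

Each rounded input can be off by up to about 6%, yet the errors partly cancel when many products are summed, which hints at why neural networks tend to tolerate coarse arithmetic.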

A leaner bit format also reduces another drag on speed: less data has to be moved into and out of memory, so running an AI model puts less load on memory.
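The memory saving follows directly from the bit width. As a back-of-the-envelope sketch, the snippet below estimates how much memory a model's weights occupy at different precisions; the parameter count is a hypothetical example, not a figure from IBM.

```python
BITS_PER_VALUE = {"FP32": 32, "FP16": 16, "8-bit": 8}


def weight_memory_gib(num_parameters: int, bits_per_value: int) -> float:
    """Memory needed to store the weights alone, in GiB."""
    return num_parameters * bits_per_value / 8 / 2**30


if __name__ == "__main__":
    num_parameters = 2**30  # hypothetical model with about one billion weights
    for name, bits in BITS_PER_VALUE.items():
        print(f"{name}: {weight_memory_gib(num_parameters, bits):.1f} GiB")
```

Going from 32 bits to 8 bits per value cuts the weight storage, and the data traffic that goes with it, to a quarter.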

IBM has incorporated approximate computing into the design of its new AIU chip, so the AIU works at considerably lower precision than a CPU. Low precision is essential to achieving high computational density in the new AIU hardware accelerator.

The AIU computes in hybrid 8-bit floating point (HFP8) rather than the 32-bit or 16-bit floating-point formats typically used for AI training. The lower-precision arithmetic lets the chip run twice as fast as it would with FP16 while producing similar training results.

While low-precision computing is necessary for higher density and faster computation, the accuracy of the deep learning (DL) model must still match what high-precision arithmetic would achieve.

Simplify AI workflow

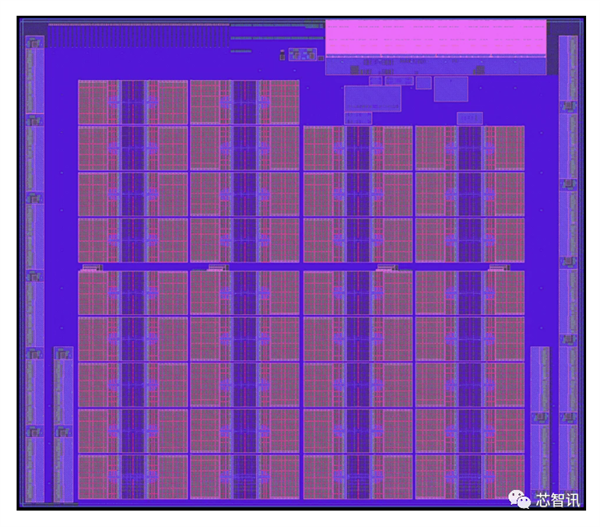

Since most AI computing involves matrix and vector multiplication, the IBM AIU chip architecture has a simpler layout than a multi-purpose CPU.

The IBM AIU is also designed to send data directly from one compute engine to the next, which saves a great deal of energy.

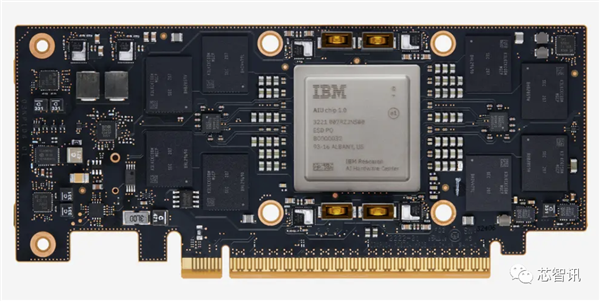

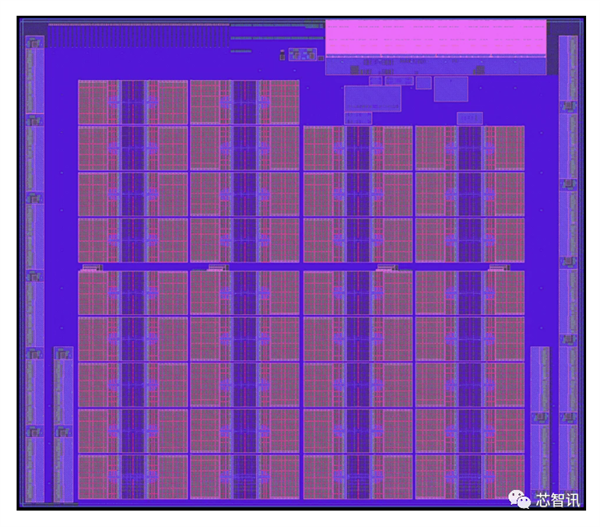

According to IBM, the AIU is a complete system-on-chip. It is a scaled-up version of the proven AI accelerator built into IBM's earlier Telum chip (7 nm process), is manufactured on a more advanced 5 nm process, has 32 processing cores, and contains 23 billion transistors.

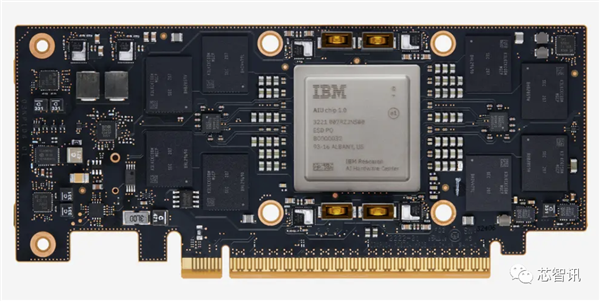

The IBM AIU is also designed to be as easy to use as a graphics card. It can plug into any computer or server with a PCIe slot.

“Deploying AI to categorize cats and dogs in photos is an interesting academic exercise,” IBM said. “But it will not solve the pressing problems we face today. If we want AI to solve real-world complexities -- like predicting the next Hurricane Ian, or whether we're headed for a recession -- we need industrial-grade hardware at the enterprise level. Our AIU brings that vision one step closer.”

How does the IBM AIU perform?

IBM has not disclosed further technical details about the AIU on its website. However, we can get a sense of its performance by looking back at IBM's initial demonstration at the 2021 International Solid-State Circuits Conference (ISSCC), where it presented performance results for an early 7 nm chip design.

The prototype IBM presented at the conference was not a 32-core chip but an experimental 4-core 7 nm AI chip that supported FP16 and hybrid FP8 formats for training and inference of deep learning models.

It also supports the INT4 and INT2 formats for scaled inference. A 2021 Linley Group newsletter, which summarized the prototype's performance, reported on IBM's demonstration:

At peak, the 7 nm chip achieves 1.9 teraflops per watt (TF/W) using HFP8.

Using INT4 inference, the experimental chip achieves 16.5 TOPS/W, which beats Qualcomm's low-power Cloud AI module.

Given that the IBM AIU is a scaled-up version of the test chip and that the process has moved to 5 nm, overall energy efficiency is expected to improve further. As the core count rises from 4 to 32, overall peak computing power is expected to increase more than eightfold.
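The eightfold figure is the baseline from core count alone. The sketch below makes that arithmetic explicit and shows how an efficiency figure in TOPS/W translates into throughput at a given power budget. It assumes ideal linear scaling with core count, and the 10 W power budget is a hypothetical value chosen only for illustration.

```python
def scaled_peak(peak: float, old_cores: int, new_cores: int) -> float:
    """Peak throughput after changing core count, assuming ideal linear scaling."""
    return peak * new_cores / old_cores


def throughput_at_power(efficiency_per_watt: float, power_watts: float) -> float:
    """Throughput delivered at a given power budget and efficiency."""
    return efficiency_per_watt * power_watts


if __name__ == "__main__":
    # Relative peak of a 32-core chip versus the 4-core prototype (prototype = 1.0).
    print(scaled_peak(1.0, old_cores=4, new_cores=32))  # 8.0
    # INT4 throughput in TOPS at a hypothetical 10 W budget and 16.5 TOPS/W.
    print(throughput_at_power(16.5, 10.0))              # 165.0
```

Any gain beyond 8x would have to come from other sources, such as the move from 7 nm to 5 nm.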

Analysts at Forbes say the lack of information makes it impossible to compare IBM's AIU with the GPUs currently used for AI computing, but they expect the chip to cost between $1,500 and $2,000.
